One of my favourite questions from Alan Watts is: 'What would you like to do if money were no object? How would you enjoy spending your life?' It suddenly dawned on me that I love research and learning about technology, especially machine learning and quantum technology. These two fields still fascinate me to this day.

My journey in tech began six years ago while I was still in university. I was sceptical that securing a job in the IT industry was plausible, because my course lacked the mathematical background required to understand machine learning. I have to give it to Andrew Ng for his famous encouragement during his ML course: 'Don't worry about it if you don't understand.' His course was the beginning of my career in the world of machine learning and natural language processing.

Over my six years in tech, I have picked up a few habits along the way:

Making maths my best friend

Though the maths prerequisites for learning machine learning are only basic linear algebra and statistics, intermediate maths becomes essential once you want to go beyond using already-implemented algorithms. Being in academia and research, making friends with maths was the wisest decision I have ever made. Much research work requires a good knowledge of maths, as it helps in reading research papers and implementing the algorithms they describe.

Developing a culture of reading research papers

Before exploring the world of research papers, I relied on blogs, articles, YouTube videos and Coursera courses to understand machine learning and further my knowledge. Research papers were intimidating at first sight: all the maths jargon, formulas, set notation and hard-to-follow pseudocode meant I did not dare look at them twice. But something kept bothering me: the desire to understand the perceptron at its most fundamental level. Could I implement the neuron without Keras and TensorFlow, using just simple Python code with NumPy or a plain Python array? There I was, scouring the different research papers on the perceptron, which marked the beginning of my exploration into research papers.
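To make that question concrete, here is a minimal sketch of a single neuron in plain NumPy, trained with the classic perceptron learning rule. The class name, the learning rate, the number of epochs and the AND-gate data are all my own illustrative assumptions, not something taken from a particular paper.

```python
import numpy as np


class Perceptron:
    """A single neuron with a step activation (illustrative sketch)."""

    def __init__(self, n_inputs, learning_rate=0.1, epochs=20):
        # Hypothetical defaults chosen for this toy example only.
        self.weights = np.zeros(n_inputs)
        self.bias = 0.0
        self.learning_rate = learning_rate
        self.epochs = epochs

    def predict(self, x):
        # Weighted sum of the inputs, then a hard threshold at zero.
        return np.where(np.dot(x, self.weights) + self.bias > 0, 1, 0)

    def fit(self, X, y):
        for _ in range(self.epochs):
            for xi, target in zip(X, y):
                # The error is -1, 0 or 1; weights move only on mistakes.
                error = target - self.predict(xi)
                self.weights += self.learning_rate * error * xi
                self.bias += self.learning_rate * error
        return self


# Toy data: the logical AND gate, which is linearly separable.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])

model = Perceptron(n_inputs=2).fit(X, y)
predictions = model.predict(X)
print(predictions)
```

Because AND is linearly separable, the learning rule should settle on weights that classify all four inputs correctly. A problem like XOR would not work with a single neuron, which is one of the first things the perceptron literature teaches.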

Coding

Writing code is among my favourite things to do with computers. Acquiring good coding practices is of utmost importance, and these skills can be gained through practice and by contributing to open-source projects. Last year I learnt a lot while contributing to Ivy - The Unified Machine Learning Framework, including good coding practices and writing tests, which I had rarely done because most of my work involved creating and fine-tuning models.

Furthermore, as interesting as research papers are, the beauty of it all is implementing the methods they propose; this not only expands one's knowledge of the domain but also builds better problem-solving techniques.

Always keeping an open mind

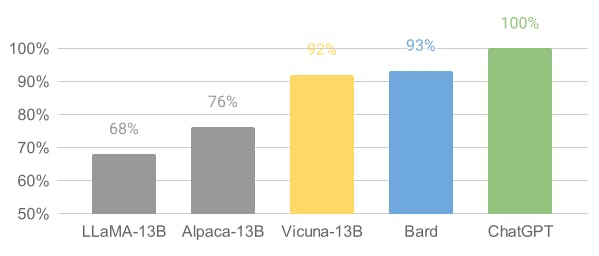

Technology is constantly evolving. GPT-4 has taken everyone by storm. Google Bard launched later, but its performance did not match that of GPT-4. Facebook's LLaMA-13B was open-sourced; at launch, its performance was not close to that of Google Bard or GPT-4. Nonetheless, after three weeks of improvements through Alpaca-13B and Vicuna-13B, the model is performing almost at the level of Google Bard.

What caught my attention is Georgi Gerganov's use of 4-bit quantization to run LLaMA on a MacBook CPU, something I would love to try on my laptop.
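To get a feel for what 4-bit quantization means, here is a rough NumPy sketch of block-wise symmetric quantization: each block of weights shares one floating-point scale, and every weight is stored as a small integer between -8 and 7. The function names and the block size of 32 are assumptions for illustration, and this is not necessarily the exact scheme Gerganov's implementation uses. For simplicity, each code is kept in its own `int8` rather than packing two 4-bit codes into one byte.

```python
import numpy as np


def quantize_4bit(weights, block_size=32):
    """Quantize a flat array to 4-bit codes with one scale per block (sketch)."""
    blocks = weights.reshape(-1, block_size)
    # Map the largest magnitude in each block to the integer 7.
    scales = np.abs(blocks).max(axis=1, keepdims=True) / 7.0
    scales[scales == 0] = 1.0  # avoid dividing by zero for all-zero blocks
    codes = np.clip(np.round(blocks / scales), -8, 7).astype(np.int8)
    return codes, scales


def dequantize_4bit(codes, scales):
    """Recover approximate float weights from the codes and scales."""
    return (codes * scales).astype(np.float32)


# Random stand-in weights; a real model would load these from a checkpoint.
rng = np.random.default_rng(0)
weights = rng.normal(size=(4, 64)).astype(np.float32)

codes, scales = quantize_4bit(weights.ravel())
restored = dequantize_4bit(codes, scales).reshape(weights.shape)
max_error = np.abs(weights - restored).max()
print(f"largest absolute error: {max_error:.4f}")
```

The trade-off is visible in the last line: the weights take far less memory, at the cost of a rounding error of at most half a scale step per weight.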

Coming from a linguistics background, I did not struggle to understand linguistic terminology. It was fascinating that I could combine what I was learning in class, for example sentence structures I and II, with NLP. The most interesting of all was text summarization, courtesy of the Gensim library, which I used to build a summarizer and feed it chunks of a novel. Though the results were sometimes not promising, they were better than nothing; reading a 500-page novel was quite a feat.
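For anyone curious what an extractive summarizer does under the hood, here is a small pure-Python sketch that scores sentences by how frequent their words are and keeps the top ones. It is a swapped-in, much simpler technique, not the algorithm Gensim used (its summarizer was TextRank-based, and the `gensim.summarization` module was removed in Gensim 4.0). The function name, the word-length filter and the sample text are my own assumptions.

```python
import re
from collections import Counter


def summarize(text, max_sentences=2):
    """Keep the sentences whose words are most frequent in the text (sketch)."""
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    # Crude stand-in for stop-word removal: ignore very short words.
    freq = Counter(w for w in words if len(w) > 3)

    def score(sentence):
        tokens = re.findall(r"[a-z']+", sentence.lower())
        return sum(freq[t] for t in tokens) / (len(tokens) or 1)

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    # Restore the original order so the summary reads naturally.
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)


# Made-up sample text standing in for a chunk of a novel.
text = (
    "The village slept under heavy snow. "
    "Nobody in the village expected the river to flood the village square. "
    "A dog barked once. "
    "By morning the river had carried the bridge away."
)
summary = summarize(text, max_sentences=2)
print(summary)
```

A frequency score like this favours sentences that repeat the text's dominant words, which is part of why results on a novel can be hit and miss.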